
Edge: The End of the Beginning?

Paul Gainham | Dec 12, 2022 | EDGE

Similar to the “I’m Spartacus” scene from the film Spartacus, the edge is suddenly popping up everywhere. Whether it’s cell sites, telephone exchanges, old computer rooms or new regional data centers, it’s difficult to go anywhere without tripping over the edge! (Pardon the pun.)

The cynic in me would suggest that this sounds like a solution looking for a problem. Call enough places the edge and you’re bound to be right eventually. Indeed, when you consider that “the edge” as a concept has been around for 10+ years with spotty uptake, cynics could be forgiven for consigning it to the hype bin and moving on.

But is that really the true picture?

Actually, I don’t think it is. Given the combination of a new breed of applications, the huge ramp in access technology capabilities — whether in mobile, fixed or Wi-Fi — and the sheer investment focus from hyperscalers and telcos alike, the real growth opportunity in edge is still ahead of us.

Conventional telecommunications network design wisdom was anchored in the principle of “centralize what you can, distribute what you must.” Because scaling at the edge is more expensive and potentially more difficult to manage, it stands to reason that it should only be undertaken if necessary. While technologies such as cloud and cloud native have eased that operational burden somewhat, the old wisdom still has merit as a principle.

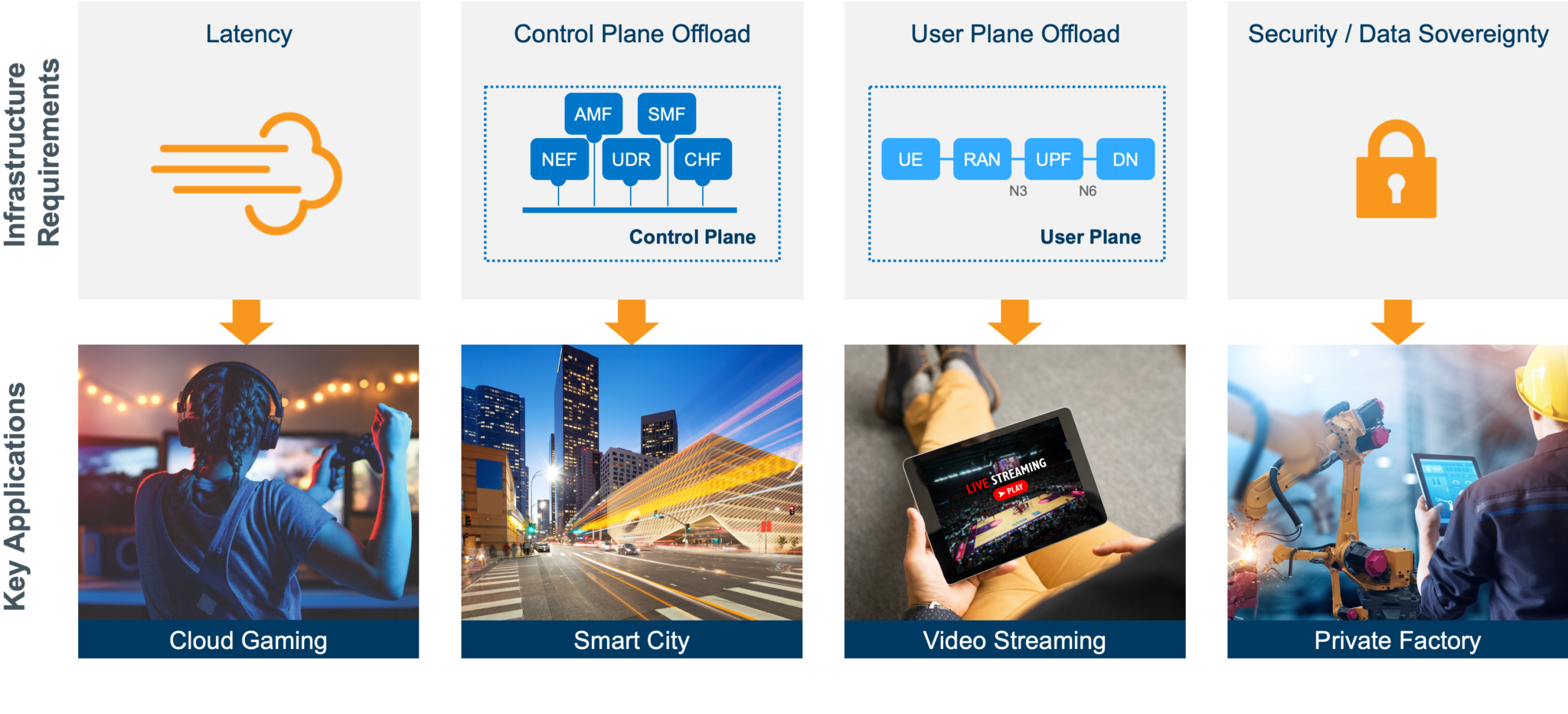

Put another way, what happens at the edge needs to happen at the edge. So, what needs to happen at the edge? As I see it, there are four cases: apps that need true low latency (sub ~30ms round-trip latency, as opposed to 100-150ms); offloading network traffic (e.g., content delivery network caching); localized security, where an app and/or its data must remain on site at a physically identifiable location; and control plane offload, where the number of devices is so large that key core network functions (in 5G, the AMF and SMF on the control plane and the UPF on the user plane) must be distributed to prevent overload and outage.
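
The “centralize what you can, distribute what you must” test above can be sketched as a simple rule: a workload goes to the edge only if at least one of the four reasons applies. This is a minimal Python sketch, not a MATRIXX or 3GPP interface. The `Workload` fields and the `CONTROL_PLANE_DEVICE_LIMIT` value are hypothetical; only the ~30ms latency figure comes from the text.

```python
from dataclasses import dataclass

# From the text: "true low latency" means roughly sub-30 ms round trip,
# versus the 100-150 ms typically achievable from a central site.
LOW_LATENCY_RTT_MS = 30

# Hypothetical threshold (not from the source): the device count above which
# we assume core network functions must be distributed. Tune per network.
CONTROL_PLANE_DEVICE_LIMIT = 100_000


@dataclass
class Workload:
    """Hypothetical description of an application or network function."""
    name: str
    rtt_budget_ms: float         # maximum acceptable round-trip latency
    offloads_traffic: bool       # e.g. CDN caching that saves backhaul
    data_must_stay_onsite: bool  # app/data tied to an identifiable location
    device_count: int            # devices served by the control plane


def edge_reasons(w: Workload) -> list[str]:
    """Return the reasons (if any) this workload must run at the edge.

    An empty list means there is no case for the edge: centralize it.
    """
    reasons = []
    if w.rtt_budget_ms < LOW_LATENCY_RTT_MS:
        reasons.append("low latency")
    if w.offloads_traffic:
        reasons.append("traffic offload")
    if w.data_must_stay_onsite:
        reasons.append("localized security")
    if w.device_count > CONTROL_PLANE_DEVICE_LIMIT:
        reasons.append("control plane offload")
    return reasons


def placement(w: Workload) -> str:
    """Centralize what you can, distribute what you must."""
    return "edge" if edge_reasons(w) else "central"
```

For example, a cloud gaming workload with a 20ms budget lands at the edge for “low latency”, while a billing workload with a 500ms budget and no other edge requirement stays central.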

Key Infrastructure and Application Requirements

If we consider the last period of edge deployments as the appetizer and view the stage we’re entering now as the main course, what on the menu is côte de boeuf rather than brisket, valuable enough to justify such an incremental investment? Currently, I see seven edge deployment models that stand up to scrutiny:

Offload Edge – Here, key control plane functions are distributed to support high-volume, highly interactive IoT networks, such as smart cities or densely populated IoT areas. Localized Internet breakout may be deployed as part of this as well. Alternatively, this could be a user-plane offload edge, such as might be used for content delivery network caching.

Satellite/Non-Terrestrial Networks (NTN) Edge – This deployment is designed to minimize inherent satellite latency by placing key apps as close to the end user as possible, latency-wise, without adding further terrestrial network latency.

Federated Edge – These locations require closely coupled application synchronization between a primary application and secondary subordinates to ensure equal performance characteristics. Online gaming is a good example of a federated edge application.

Private Edge – For some locations, data security is paramount. A private edge could be a standalone private cellular network or a fixed/Wi-Fi network, or it could be served by a fixed VPN or a slice of a PLMN. Automated factory production processes that rely on artificial intelligence or machine-learning apps, where the confidentiality and security of intellectual property must be protected, are good examples.

Neutral Host Network Edge – This model supports deployments where superior indoor cellular network performance is needed and a clean, neutral architecture (e.g., one owner with potentially multiple mobile networks using the same edge) is preferred, such as large office buildings, airports, shipping ports and sports stadiums.

SASE/Branch Offload Edge – These locations are typically part of an enterprise VPN, where in-house or managed applications (e.g., security, UCaaS, collaboration, VNFs) are located at the branch or at a closely coupled cloud location to improve performance and reduce network bandwidth costs.

Dynamic Edge – This is probably the most interesting edge deployment model. Here, dynamic traffic management, premium offers based on geo-location, dynamic application loading based on VIP customer needs and dynamic pricing all happen. The dynamic edge needs to provide an experience appropriate to a wide range of customer demographics and needs in both B2C and B2B segments.

As you can see, there’s a bright future for the edge, but it does raise one question: if, as I argued earlier, operators should deploy at the edge only where it is needed and delivers value, how can telecommunications network operators best monetize that value?

This is where the industry is at a crossroads.

Edge applications, by their very nature, are becoming more dynamic, transient and on-demand over time. Applying 20-year-old postpaid billing models — or messages, megabytes and minutes pricing plans — to them is futile.

If network usage in its broadest sense was the monetization currency of telecommunications over the last 20 years, the next 20 years will be defined by the currency of value if the cycle of commoditization is to be broken and the business model of telecommunications is to return to health.

Models such as Network-as-a-Service, outcome-based pricing, congestion-based pricing, federated application pricing, geo-location, dynamic subscriptions and vertical application pricing are all good examples of value-based pricing at work. When matched with the key delivery principles of digital self-help, flexible payment terms, digital marketplaces and real-time offer agility, the edge menu starts to look very tasty indeed.
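
To make one of these models concrete, here is a minimal sketch of congestion-based pricing in Python. The linear surcharge curve, the 70% utilization threshold and the parameter names are all assumptions made for illustration; a real implementation would be driven by live network load data and the operator’s own rating rules.

```python
def congestion_price(base_price: float, utilization: float,
                     threshold: float = 0.7,
                     max_surcharge: float = 1.0) -> float:
    """Price per unit under a simple, hypothetical congestion-based model.

    Below `threshold` utilization the base price applies. Above it, the
    price rises linearly, reaching base_price * (1 + max_surcharge) at
    100% load.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if not 0.0 <= threshold < 1.0:
        raise ValueError("threshold must be in [0, 1)")
    # Fraction of the congested band (threshold..100%) currently in use.
    excess = max(0.0, utilization - threshold) / (1.0 - threshold)
    return base_price * (1.0 + max_surcharge * excess)
```

With a base price of 2.0 per unit, this sketch charges 2.0 at 50% utilization and 4.0 at full load. A linear ramp keeps the offer easy to explain to customers; a stepped or exponential curve would be an equally valid design choice.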

Learn more about the MATRIXX Software approach to dynamic monetization.
